This is an in-depth review of how synyx GmbH & Co building is using Turing Pi board in combination with GitLab CI and Ansible for their CI/CD needs

Author: Karl-Ludwig Reinhard

Introduction

In my previous article, I explained some cloudy statements about Ansible and Gitlab CI and that we’re going to work with a Turing Pi for that purpose. I already talked about the capabilities of this amazing board and the first steps it takes to get it up and running. Today I’ll go a little bit into details on what benefits we are having when running Ansible scripts in a CI environment and most important where the Turing Pi helps us.

What we’re going to build with it?

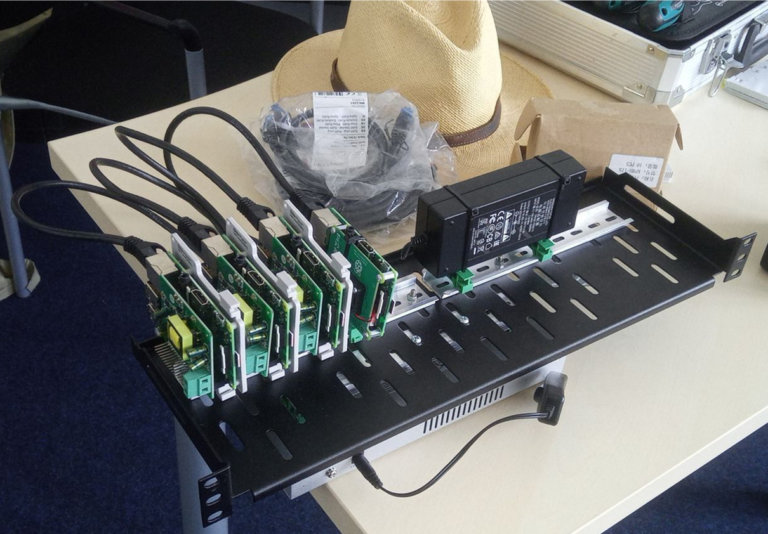

In our project, we have a lot of Raspis and configure them all with Ansible. From time to time we are adding new features and fixing bugs in our Ansible scripts and that makes them more complex. We are using GitLab to review our code, but what is missing is a validation of the syntax and that the code will actually work on production’s hardware. Considering that our team continues to grow, this adds more complexity. So we put 4 Raspberry Pis into a rack and integrated them as a deploy target for our merge requests.

When a user opens a merge request, we reboot the machine and run the OS via the network. With that, we can flash the SD card with a fresh Raspbian. After a reboot, we can start our Ansible scripts to test if the merge request is sane.

With just running an Ansible Playbook for setting up hosts from 0 to 100 we get the following benefits:

- Check if the syntax is correct

- Ensure that all needed files are committed

- Can different configuration flavors still be applied?

Why do we need netboot at all?

Our goal is to run our Ansible script against a freshly installed Raspbian. We cannot be sure, that the last tested merge request was flawless. The idea is to reboot the node into an NFS based root file system. From there we can flash a Raspbian and reboot into a clean OS. After that, we can apply & test our Ansible scripts. Following this process guarantees, that every merge request gets a clean system without any leftovers from a previous run.

Setting up Gitlab CI/runner

To be honest – we’re currently stuck with a shell runner. We weren’t able to find a proper working docker image with ansible that doesn’t lack some tooling/libs that are currently required. Building a custom docker image would be possible but for the sake of simplicity, we use a simple shell runner.

First of all, we need to set up one node as a DHCP/TFTP boot server. Here is a good tutorial on how to do this. We recommend to run one of the Turing Pi nodes as a DHCP/TFTP boot server – it’s more reliable than a server placed outside of the board.

One thing to note: The DHCP server is located behind a NAT router so it does not interfere with DHCP servers in our company network.

When the server is up and running, we need to set up the Gitlab runner.

- Due to the fact we rely on a shell runner Ansible has to be installed by hand. This can be done with your package manger. Also, install all dependencies that are needed for a successful Ansible run.

- Install GitLab CI runner. When asked which kind of runner, choose shell runner.

- Add a custom inventory.

You can use your regular inventory and add there your ci host. Then you have to limit your Ansible run with–limitoption. If omitted you’ll most probably deploy your prod system with every merge request ? - Add or change

.gitlab-ci.ymlaccordingly:

stages:

- test-on-pis

test-on-turing-pi:

stage: test-on-pis

script:

- export ANSIBLE_HOST_KEY_CHECKING=False

- mkdir $HOME/.ssh || true

- echo -e "Host 192.168.2.*\nStrictHostKeyChecking no\nUserKnownHostsFile=/dev/null" > $HOME/.ssh/config

- ansible-playbook reflash.yml -i hostsCI.yml -e "ansible_ssh_pass=Bai9iZaetheeSee5"

- ansible-playbook initial.yml -i hostsCI.yml -e "ansible_ssh_pass=raspberry"

- ansible-playbook site.yml -i hostsCI.yml -e "ansible_ssh_pass=Bai9iZaetheeSee5" -e "http_proxy="

except:

- master

We do some „dirty“ SSH tricks at the beginning. This ensures that Ansible can connect to the systems even when the host keys have changed. That will happen with our setup after we reflashed the system. We tried to do this during an ansible run but weren’t successful.

Reflashing your Pi is really straightforward when the PXE server is setup. Let’s have a look at some excerpt:

--

- name: Check if already pxe boot

command: "/bin/bash -c \"findmnt / | awk '{ print $3}' | tail -n1\""

register: root_mnt

- name: Destroy boot partition

command: sudo sh -c 'ls -lha > /dev/mmcblk0p1; exit 0'

when: root_mnt.stdout_lines[0] != "nfs"

- name: Reboot the machine into pxe

reboot:

when: root_mnt.stdout_lines[0] != "nfs"

....

- name: Flashing plane os to sd (gzip)

command: sudo sh -c 'zcat /image_archive/{{ os | default("raspbian.img.gz") }} > /dev/mmcblk0'

ignore_errors: yes

- name: Reboot the server

become: yes

become_user: root

shell: "sleep 5 & reboot"

async: 1

poll: 0

- pause:

seconds: 40

- name: Wait for the reboot and reconnect

wait_for:

port: 22

host: '{{ (ansible_ssh_host}}'

search_regex: OpenSSH

delay: 60

timeout: 120

connection: local

- • First of all, we check if the current system is already running on an NFS root filesystem. If so we can skip the next two steps. If not we destroy the current boot partition – just write some rubbish on the raw device.

- • Next, we reboot the system.

- • Then we flash the sd card/eMMC with the help of Zcat. We compress our images to save some HD space and be able to transfer them via a wan connection(if needed). The performance impact is negligible. One reboot later and that’s it. We have a freshly installed Raspbian and can apply our Ansible scripts to a fresh system.

Two things to note:

- • We ignore errors when flashing. When the image is missing or similar, we just continue and reboot the system. In most cases, the system will be still accessible

- • I couldn’t get the reboot module working properly. I think the newly flashed system does not have the same remote user and password as when we started the role. With this ugly part, it’s working ?

Be aware that the above script is an excerpt from the main.yml of the corresponding role and is not complete!

What we also do

After a successful run, we apply some smoke tests. One flavor of our RPis is kiosk systems that start X11 and opens a chromium browser in fullscreen mode. With some „technical creativity“(aka mocking) we ensure that the browser is running. When this is not going to happen, the build fails. Another flavor is used for controlling an industrial actor and its basic functionality is also tested at the end. We do not test every aspect, it’s more of a kind of smoke tests or checks if certain TCP ports are open.

What we also want to know how our Ansible scripts affect a running system that is not flattened or rebooted on every run. We deploy two node continuously when a merge to master happens. With that, we can also monitor the memory usage on these systems and can identify possible memory leaks or stability issues when running a system longer time. It has served us well as an additional safety net.

Why the Turing Pi is ideal for us

Compared to our old setup we gained speed and stability of our builds. In the past, we had problems when booting multiple pis in parallel from the network. PIs were stuck in the boot process and didn’t get their kernel via TFTP or couldn’t mount the NFS root reliably. To this day, I’m not sure where I went wrong – I fixed it by throttling the reboots so that the Pis are booted one by one. That slowed down the „build“ significantly.

With the Turing Pi we did not encounter any problems booting pis in parallel from the network at the same time. So after testing it a bit I removed the throttle and reduced the build time significantly

Another thing we see a lot less stuck Ansible jobs because the network connection is flaky. I got the impression, that working with the network on the PCB is a lot more reliant. We use Turing Pi for two weeks as a replacement for our old „test cluster“ – can’t remember that I had to reset the cluster manually caused by a frozen job.

Currently, we work most of the time from home – Covid19 case numbers are rising in Germany and it looks like we’ll stay at home for some time. With some VPN and network magic, we can use some nodes as test devices from the remote. In the past, everybody got his „personal“ test pi, had to ensure proper network connection, flash the sd card manually, etc. Yes, you can use Raspbian X86 but some things like accessing Bluetooth or GPIOs cannot properly be tested in a virtual environment.

What we’ve learned as a team?

In our last review and retro, we talked also about our CI setup. We’ve agreed, that it helps us a lot in our day-to-day business and adds to the quality of our merge requests. For me personally, it is a very good incentive to use more merge requests so the CI pipeline is triggered and I am forced to work more in a clean way. But it takes time to set it up, stabilize some (ugly) parts – but after all the time investment is worth it. Additional tests and reviews improve the quality even in the context of configuring an OS and reduces the number of errors.

What we miss with Turing Pi

Not much to be honest. Two things would be really awesome:

- Switching the HDMI output from the first node to a different node via i2c

- For flashing EMMc the first slot only can be used. It would be great if you could also use the other slots for flashing as well.

I think achieving these two points isn’t easy and for us not really pain points ?

Where we (maybe) want to go

Currently, we are deploying our prod systems still from our workstations. Continuos delivery will be a topic for us. But something like „merge on master“ and deploy the prod system instantly is too risky for us. Our Pis are an integral part of our customers. And that’s not the definition for CD(for me :)). Our pis are not easily accessible in a matter of minutes and are distributed across several hundreds of kilometers. We do have strategies to mitigate this problem – but we want to be thoughtful about this.

Some kind of A/B deployment or a time triggered deployment could reduce the risk of stopping all subsidiaries at once, but we need time to think about and be patient ?

Another point is scaling up. Currently, we allow only one job to be run, because we don’t have enough nodes. But I’ve ordered another Turing pi board! Besides that, we already have some RPi4 in production. Turing Pi plans to start to develop a CM4 compatible board after the next batch of boards is completed. Most likely we’ll order one.

Thanks a lot, Turing Pi Team – your product helps us with every merge request.