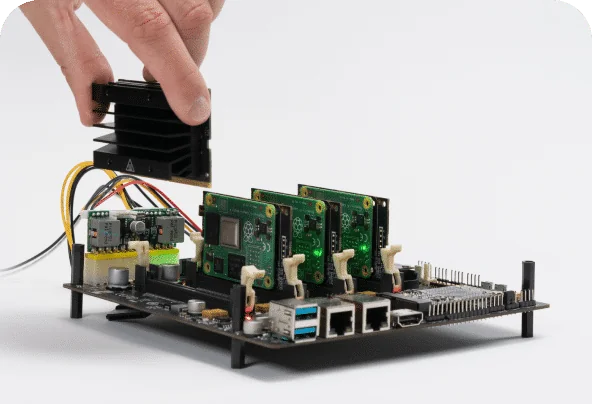

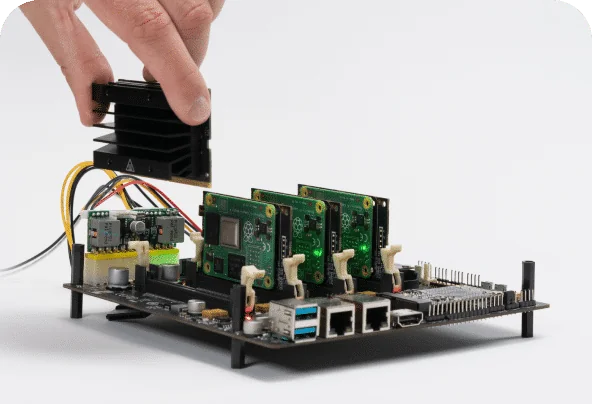

Turing Pi 2.5 Cluster board

Edge AI

Inference when low latency and cost are critical

Self-hosted

Development

Quickly set up Kubernetes and machine learning stacks using the built-in BMC, its CLI, and the open-source Turing Pi firmware. Take advantage of authentication, serial console over LAN, OS image flashing, self-testing, and OTA updates.

$ tpi --node

$ tpi --power

$ tpi --usb

$ tpi --uart

$ tpi --flash

$ tpi --usb=host --node=1

$ tpi --upgrade=/sdcard/bmc.swu

$ tpi --power=off
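
The commands above can be collected into a small script. The following is a minimal sketch, assuming the `tpi` binary on the BMC accepts the flags exactly as listed above. The `run_tpi` helper and the `RUN` variable are hypothetical names introduced here for illustration and are not part of the Turing Pi firmware. By default the script only prints each command, so it can be reviewed before anything is sent to the board.

```shell
#!/bin/sh
# Sketch only: run_tpi and RUN are illustrative names, not part of tpi.
# With RUN unset (or not "1"), each command is printed instead of executed.

run_tpi() {
    if [ "${RUN:-0}" = "1" ]; then
        tpi "$@"
    else
        echo "tpi $*"
    fi
}

# Flags copied verbatim from the examples above. Their exact behavior
# depends on the installed BMC firmware version, so treat the comments
# below as assumptions about intent.

# Presumably switches the USB port to host mode for node 1.
run_tpi --usb=host --node=1

# Presumably upgrades the BMC firmware from an image on the SD card.
run_tpi --upgrade=/sdcard/bmc.swu

# Presumably powers the nodes off.
run_tpi --power=off
```

Running the script as-is prints the three `tpi` commands. Setting `RUN=1` in the environment would execute them on a system where `tpi` is installed.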